Last Friday I had the pleasure of speaking at ThingsCon in Amsterdam, invited by Iskander Smit to join a day exploring this year’s theme of ‘resize/remix/regen’.

The conference took place at CRCL Park on the Marineterrein – a former naval yard that’s spent the last 350 years behind walls, first as the Dutch East India Company’s shipbuilding site (they launched Michiel de Ruyter’s fleet from here in 1655), then as a sealed military base.

Since 2015 it’s been gradually opening up as an experimental district for urban innovation, with the kind of adaptive reuse that gives a place genuine character.

The opening keynote from Ling Tan and Usman Haque set a thoughtful and positive tone, and the whole day had an unusual quality – intimate scale, genuinely interactive workshops, student projects that weren’t just pinned to walls but actively part of the conversation. The kind of creative energy that comes from people actually making things rather than just talking about making things.

My talk was titled “Back to BASAAP” – a callback to work at BERG, threading through 15-20 years of experiments with machine intelligence.

The core argument (which I’ve made in the Netherlands before…): we’ve spent too much time trying to make AI interfaces look and behave like humans, when the more interesting possibilities lie in going beyond anthropomorphic metaphors entirely.

What happens when we stop asking “how do we make this feel like talking to a person?” and start asking “what new kinds of interaction become possible when we’re working with a machine intelligence?”

In the talk I try to update my thinking here with contemporary signals around more-than-human design and also more-than-LLM approaches to AI, namely so-called “World Models”.

What follows are the slides with my speaker notes – the expanded version of what I said on the day, with the connective tissue that doesn’t make it into the deck itself.

One of the nice things about going last is that you can adjust your talk and slides to include themes and work you’ve seen throughout the day – and I was particularly inspired by Ling Tan and Usman Haque’s opening keynote.

Thanks to Iskander and the whole ThingsCon team for the invitation, and to everyone who came up afterwards with questions, provocations, and adjacent projects I need to look at properly.

Hi I’m Matt – I’m a designer who studied architecture 30 years ago, then got distracted.

Around 20 years ago I met a bunch of folks in this room, and also started working on connected objects, machine intelligence and other things… Iskander asked me to talk a little bit about that!

I feel like I am in a safe space here, so I imagine many of you are like me and have a drawer like this, or even a brain like this… so hopefully this talk is going to have some connections that will be useful one day!

So with that said, back to BERG.

We were messing around with ML, especially machine vision – very early stuff – e.g. this experiment we did in the studio with Matt Biddulph to try and instrument the room, and find patterns of collaboration and space usage.

And at BERG we tended to have some recurring themes that we would resize and remix throughout our work.

BASAAP was one.

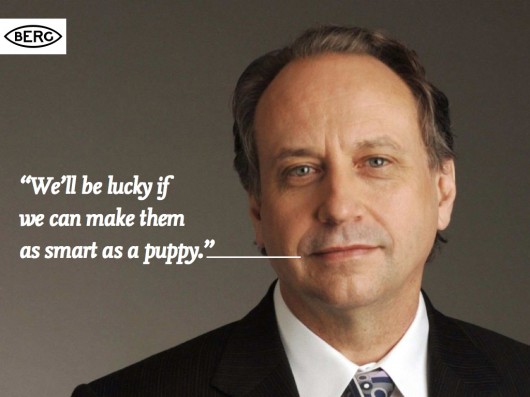

BASAAP is an acronym for Be As Smart As A Puppy – which actually I think first popped into my head while at Nokia a few years earlier.

It alludes to this quote from MIT roboticist and AI curmudgeon Rodney Brooks, who said that if we got the smartest folks together for 50 years to work on AI, we’d be lucky if we could make it as smart as a puppy.

I guess back then we thought that puppy-like technologies in our homes sounded great!

We wanted to build those.

Also it felt like all the energy and effort to make technology human was kind of a waste.

We thought maybe you could find more delightful things on the non-human side of the uncanny valley…

And implicit in that, I guess, was a critique of the mainstream tech drive at the time (around the earliest days of Siri and Google Assistant) towards voice interfaces, which were the dominant dream.

A Google VP at the time stated that their goal was to create ‘the Star Trek computer’.

Our clients really wanted things like this, and we had to point out that voice UIs are great for moving the plot of TV shows along.

I only recently (via the excellent Futurish podcast) learned this term – ‘hyperstition’ – a self-fulfilling idea that becomes real through its own existence (usually in movies or other fictions), e.g. flying cars.

And I’d argue we still need to be critically aware of hyperstitions in our work…

Whatever your position on them, LLMs are in a hyperstitial loop right now of epic proportions.

Disclaimer: I’ve worked on them, I use them. I still try and think critically of them as material…

And while it can feel like we have crossed the uncanny valley there, I think we can still look to the BASAAP thought to see if there’s other paths we can take with these technologies.

My old boss at Google, Blaise Agüera y Arcas, has just published this fascinating book on the evolutionary and computational basis of intelligence.

In it he frames our current moment as the start of a ‘symbiosis’ of machine and human intelligence, much as we can see other systems of natural/artificial intelligences in our past – like farming, cities, economies.

There’s so much in there – but this line from an accompanying essay in Nature brings me back to BASAAP. “Their strengths and weaknesses are certainly different from ours” – so why as designers aren’t we exposing that more honestly?

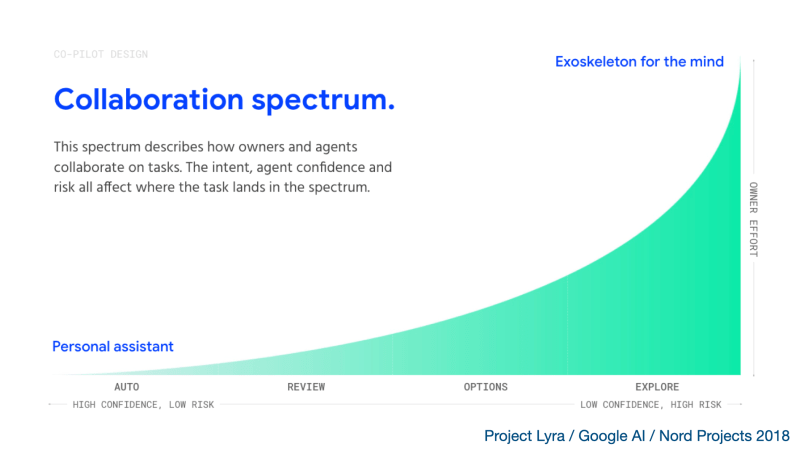

In work I did in Blaise’s group at Google in 2018 we examined some ways to approach this – by explicitly surfacing an AI’s level of confidence in the UX.

Here’s a little mock-up of some work with Nord Projects from that time, where we imagined dynamic UI built by the agent to surface its uncertainties to its user – and, right up to date, papers published at the launch of Gemini 3 suggest that generated UI could start to support things like that.

And just yesterday this new experimental browser ‘Disco’ was announced by Google Labs – that builds mini-apps based on what it thinks you’re trying to achieve…

But again, let’s return to that thought about machine intelligence having a symbiosis with the human rather than mimicking it…

There could be more useful prompts from the non-human side of the uncanny valley… e.g. spiders.

I came across this piece in Quanta https://www.quantamagazine.org/the-thoughts-of-a-spiderweb-20170523/ some years back about cognitive science experiments on spiders revealing that their webs are part of their ‘cognitive equipment’. The last paragraph struck home – ‘cognition to be a property of integrated nonbiological components’

And… of course…

In Peter Godfrey-Smith’s wonderful book he explores different models of cognition and consciousness through the lens of the octopus.

What I find fascinating is the distributed, embodied (rather than centralized) model of cognition they appear to have – with most of their ‘brains’ being in their tentacles…

I have always found this quote from ETH’s Bertrand Meyer inspiring… No need for ‘brains’!!!

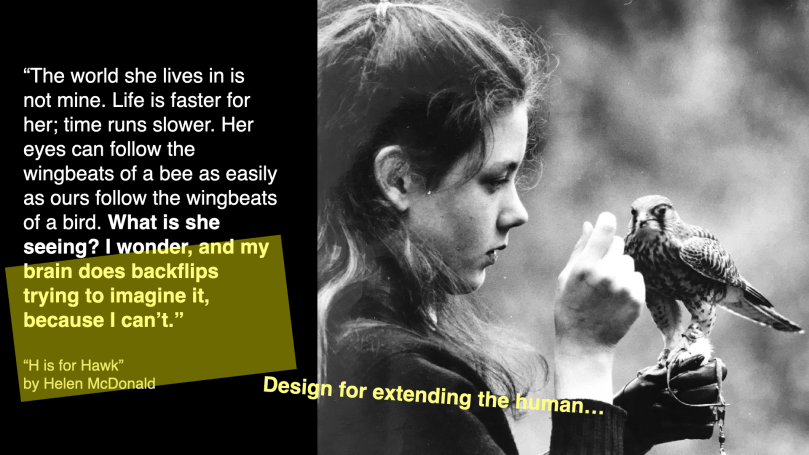

“H is for Hawk” is a fantastic memoir of the relationship between someone and their companion species. Helen Macdonald writes beautifully about the ‘her that is not her’.

(footnote: I experimented with search-and-replace in her book here back in 2016: https://magicalnihilism.com/2016/06/08/h-is-for-hawk-mi-is-for-machine-intelligence/)

This is CavCam and CavStudio – more work by Nord Projects, with Alison Lentz, Alice Moloney and others in Google Research examining how these personalised trained models could become intelligent reactive ‘lenses’ for creative photography.

We could use AI in creating different complementary ‘umwelts’ for us.

I’m sure many of you are familiar with Thomas Nagel’s 1974 piece ‘What is it like to be a bat?’ – well, what if we could know that?

BAAAAT!!!

This ‘more-than-human’ approach to design has been evident in the room, and in the zeitgeist, for some time now.

We saw it beautifully in Ling Tan and Usman Haque’s work and practice this morning, and of course it’s been wonderfully examined in James Bridle’s writing and practice too.

Perhaps surprisingly, the tech world may be heading there too.

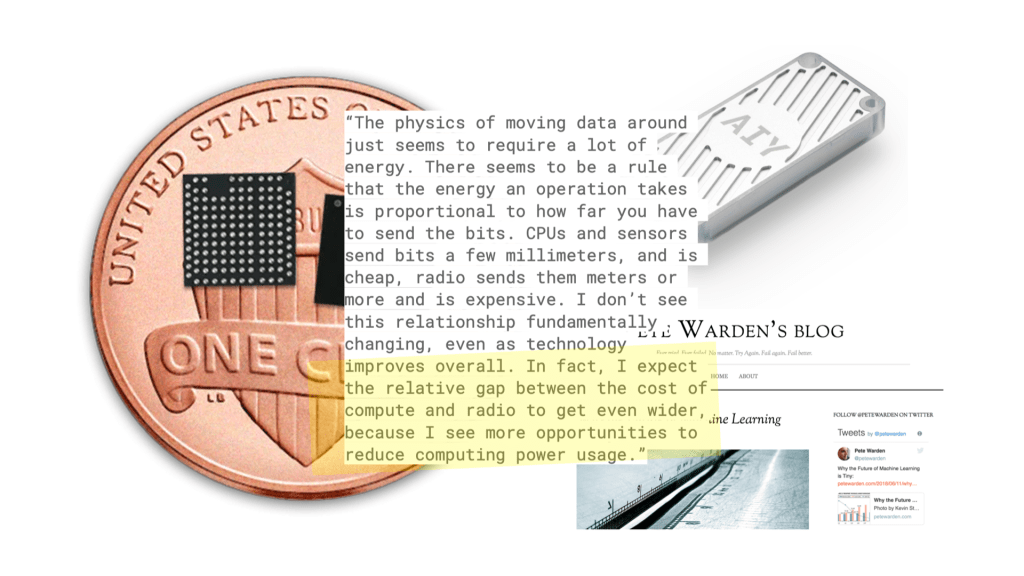

There’s a growing suspicion among AI researchers – voiced at their big event NeurIPS just a week or so ago – that the language model will need to be supplanted, or at least complemented, by other more embodied and physical approaches, including what are getting categorised as “World Models”. Playing in the background is video from Google DeepMind’s announcement on this agenda this autumn.

Fei-Fei Li (one of the godmothers of the current AI boom) has a recent essay on Substack exploring this.

“Spatial Intelligence is the scaffolding upon which our cognition is built. It’s at work when we passively observe or actively seek to create. It drives our reasoning and planning, even on the most abstract topics. And it’s essential to the way we interact—verbally or physically, with our peers or with the environment itself.”

Here are some old friends from Google who have started a company – Archetype AI – looking at physical world AI models that are built up from a multiplicity of real-time sensor data…

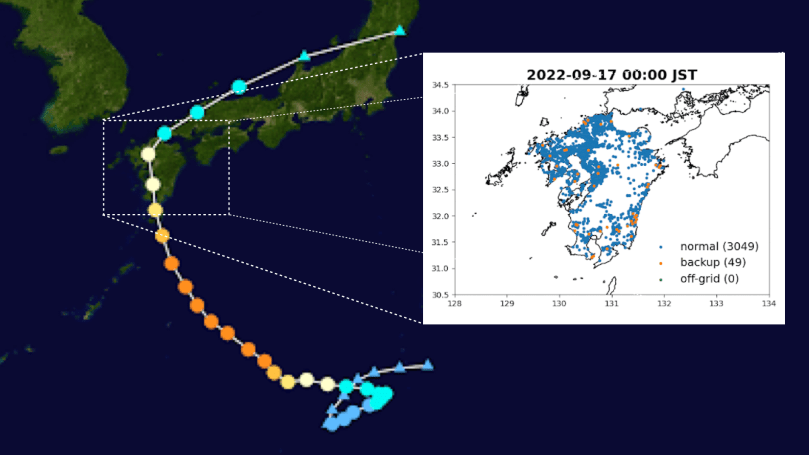

As they mention the electrical grid – here’s some work from my time at solar/battery company Lunar Energy in 2022/23 that can illustrate the potential for such approaches.

In Japan, Lunar have a large fleet of batteries controlled by their Lunar AI platform. You can perhaps see in the time-series plot the battery sites ‘anticipating’ the approach of the typhoon and making sure they are charged to provide effective backup to the grid.

Maybe this is… “What is it like to be a BAT-tery?”

Sorry…

I think we might have had our own little moment of sonic hyperstition there…

So, to wrap up.

This summer I had an experience I have never had before.

I was caught in a wildfire.

I could see it on a map from space, with ML world models detecting it – but also with my eyes, 500m from me.

I got out – driving through the flames.

But it was probably the most terrifying thing that has ever happened to me… I was lucky. I was a tourist. I didn’t have to keep living there.

But as Ling and Usman pointed out – we are in a world now where these types of experiences are going to become more and more common.

And as they said – the only way out is through.

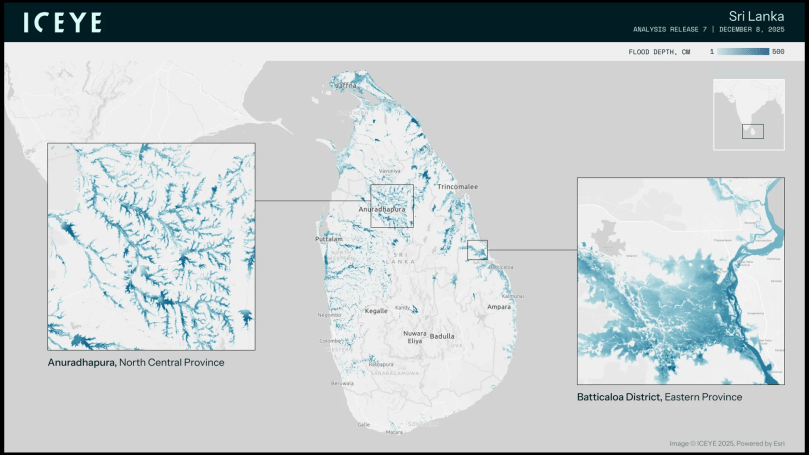

This is an Iceye Gen4 Synthetic Aperture Radar satellite – designed and built in Europe.

Here’s some imagery they released this past week of how they’ve been helping emergency response to the flooding across South and Southeast Asia – e.g. Sri Lanka here, with real-time imaging.

But as Ling said this morning – we can know more and more but it might not unlock the regenerative responses we need on its own.

How might we follow their example with these new powerful world modelling technologies?

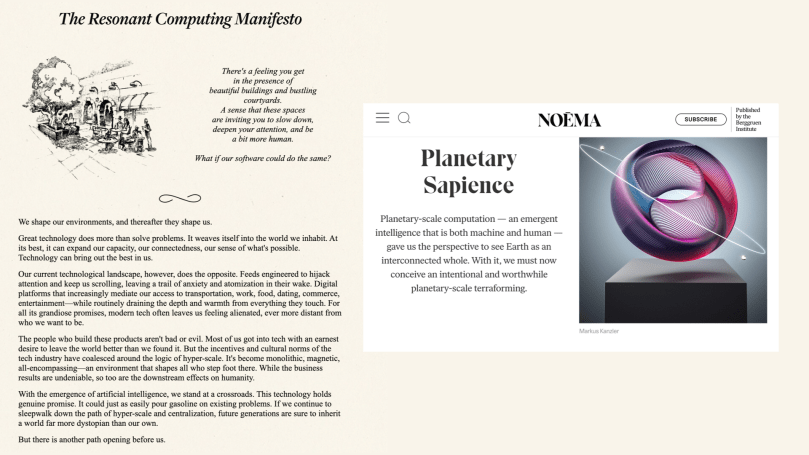

As well as Ling and Usman’s work, responses like the ‘Resonant Computing’ manifesto (disclosure: I’m a signatory/supporter) and the ‘planetary sapience’ visions of the Antikythera organisation give me hope we can direct these technologies to resize, remix and regenerate our lives and the living systems of the planet.

The AI-assisted permaculture future depicted in Ruthanna Emrys’ “A Half-Built Garden” gives me hope.

The rise of bioregional design as championed by the Future Observatory at the Design Museum in London gives me hope.

And I’ll leave you with the symbiotic nature/AI hope of my friends at Superflux and their project that asks, I guess – “What is it like to be a river?”…

THANK YOU.