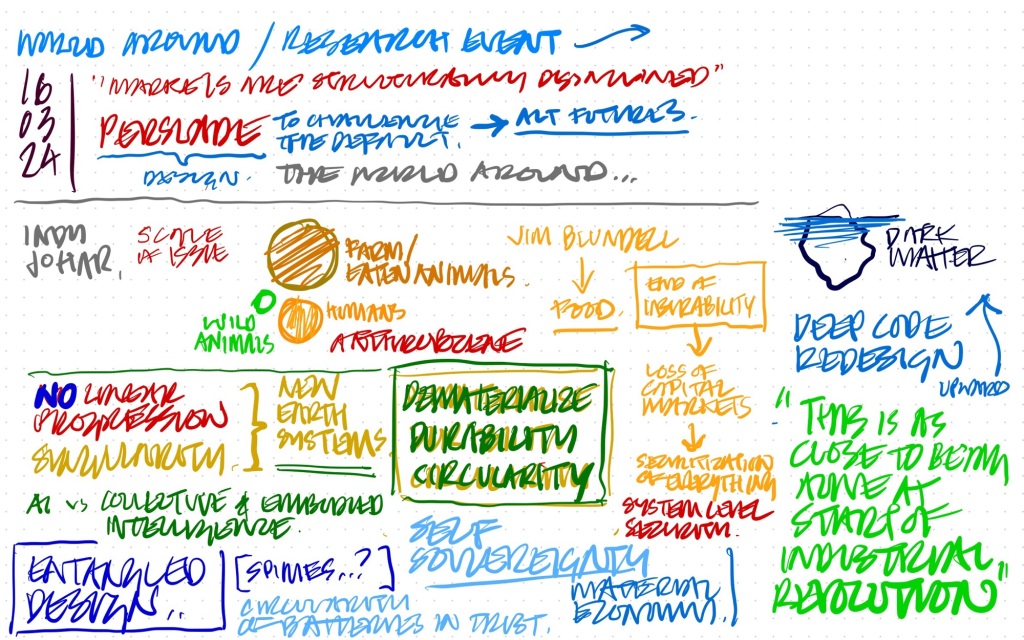

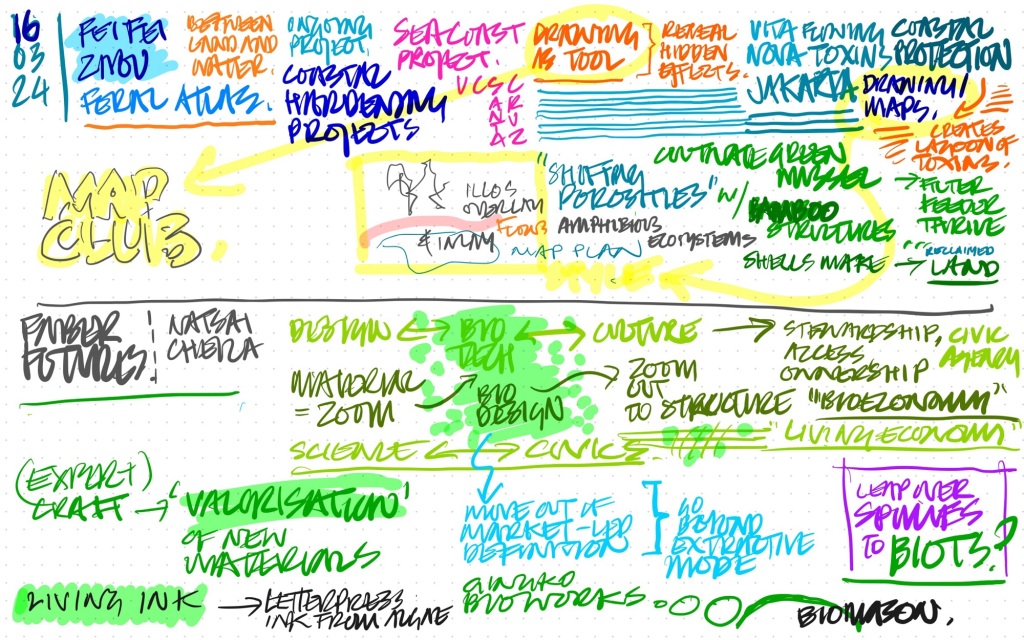

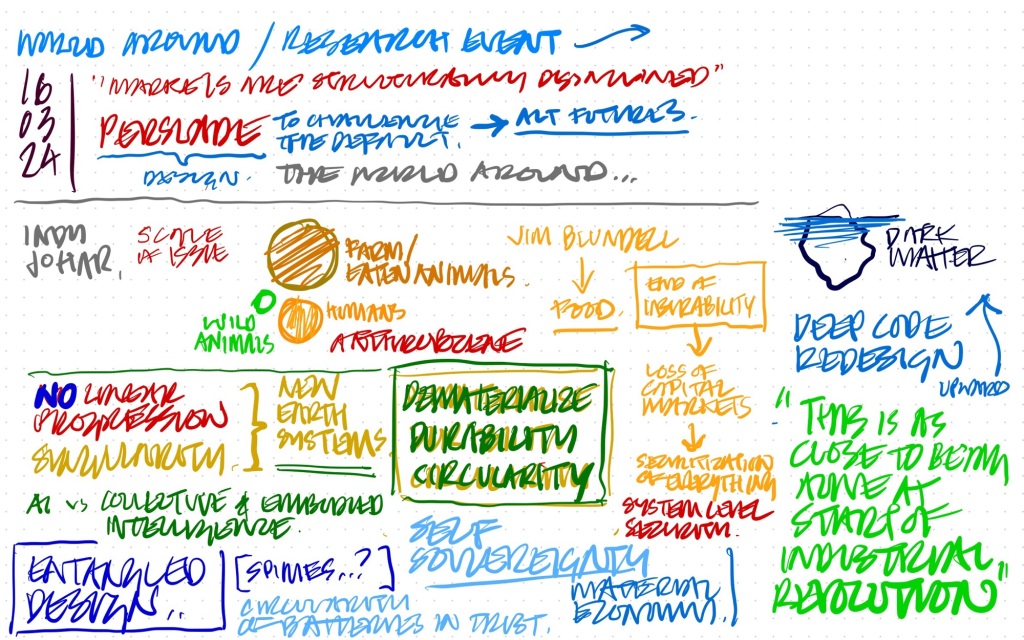

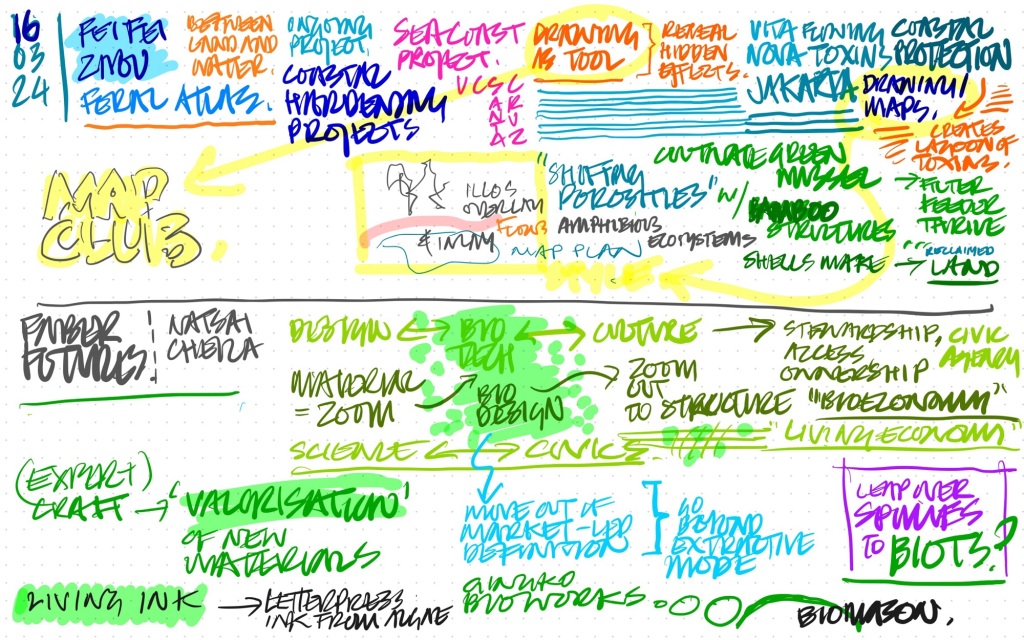

A thought-provoking and sometimes heartbreaking event. My note-taking trailed off as the event went on, which is no reflection on the presentations, other than my being engrossed in them!

Last week I had the wonderful experience of taking my ten-year-old daughter to the Goldsmiths BA Design show (thanks for the invite, Matt Ward!)

From the moment she walked through the door – her jaw dropped.

There was a delight and surprise that this might be something *they* could do one day.

That this might be what school turns into.

That the young people there, who were maybe only a decade older, could explore ideas, learn crafts old and new, make things, and ask questions through all that work.

And that they would be kind and clever and generous in answering her questions.

So – for sure, take your kids to the graduation shows. And design schools – maybe have a day where you invite local schools and get your students to explain their work to 10-year-olds…?

p.s. Apologies to the students whose work I have images of but whose names I didn’t capture at the show. I will try to get hold of a catalogue and rectify this here ASAP!!!

In the introduction to Malcolm McCullough’s #breezepunk classic “Downtime on the Microgrid” he says…

This past week we (Lunar Energy) sponsored a social event for an energy industry conference in Amsterdam.

There were the usual opportunities to ‘dress’ the space, put up posters, screens, etc.

We even got to name a cocktail (“lunar lift-off”, I think they called it! I guess moonshots would have been a different kind of party…) – but what we landed on was… coasters…

Paulina Plizga, who joined us earlier this year came up with some lovely playful recycled cardboard coasters – featuring interconnected designs of pieces of a near-future electrical grid (enabled by our Gridshare software, natch) and stats from our experience in running digital, responsive grids so far.

The designs partly reference Ken Garland’s seminal “Connect” game for Galt Toys: could we make a little ‘infinite game’ with coasters as play pieces for the attendees?

If you’ve ever been to something like this – and you’re anything like me – then you might want something to fidget with, help start a conversation… or just be distracted by for a moment! We thought these could also serve a social role in helping the event along, not just keep your drink from marking the furniture!

I was delighted when our colleagues who were attending said Paulina’s designs were a hit – and that they had actually used them to give impromptu presentations on Gridshare to attendees!

So a little bit more playful grid awareness over drinks! What could be better?

Thank you Ken Garland!

There’s a lovely site here with more of Ken’s wonderful work on games and toys for Galt. And here’s Ben’s post about it from a while back where I stole the picture from, as I’m writing this in a cafe and can’t take a picture of my set!

I was fortunate to be invited to the wonderful (huge) campus of TU Delft earlier this year to give a talk on “Designing for AI.”

I felt a little bit more of an imposter than usual – as I’d left my role in the field nearly a year ago – but it felt like a nice opportunity to wrap up what I thought I’d learned in the last 6 years at Google Research.

Below is the recording of the talk – and my slides with speaker notes.

I’m very grateful to Phil Van Allen and Wing Man for the invitation and support. Thank you Elisa Giaccardi, Alessandro Bozzon, Dave Murray-Rust and everyone at the Faculty of Industrial Design Engineering at TU Delft for organising a wonderful event.

The excellent talks of my estimable fellow speakers – Elizabeth Churchill, Caroline Sinders and John can be found on the event site here.

Hello!

This talk is mainly a bunch of work from my recent past – the last 5/6 years at Google Research. There may be some themes connecting the dots I hope! I’ve tried to frame them in relation to a series of metaphors that have helped me engage with the engineering and computer science at play.

I won’t labour the definition of metaphor or why it’s so important in opening up the space of designing AI, especially as there is a great, whole paper about that by Dave Murray-Rust and colleagues! But I thought I would race through some of the metaphors I’ve encountered and used in my work in the past.

The term AI itself is best seen as a metaphor to be translated. John Giannandrea was my “grand boss” at Google and headed up Google Research when I joined. JG’s advice to me years ago still stands me in good stead for most projects in the space…

But the first metaphor I really want to address is that of the Optometrist.

This image of my friend Phil Gyford (thanks Phil!) shows him experiencing something many of us have done – taking an eye test in one of those wonderful steampunk contraptions where the optometrist asks you to stare through different lenses at a chart, while asking “Is it better like this? Or like this?”

This comes from the ‘optometrist algorithm’ work by colleagues in Google Research working with nuclear fusion researchers. The AI system optimising the fusion experiments presents experimental parameter options to a human scientist, in the manner of an eye-testing optometrist: ‘better like this, or like this?’
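To make the shape of that interaction concrete, here is a toy sketch of optimisation driven only by pairwise ‘this or that?’ choices. It is not the published fusion method. The `prefer` callback stands in for the human scientist, and the target, step size and step count are all made-up assumptions for illustration.

```python
import random
from typing import Callable, List

Params = List[float]


def optometrist_search(
    start: Params,
    prefer: Callable[[Params, Params], bool],
    steps: int = 200,
    step_size: float = 0.1,
    seed: int = 0,
) -> Params:
    """Hill-climb using only pairwise 'this or that?' judgements.

    `prefer(a, b)` stands in for the human expert: it returns True when
    setting `a` is judged better than setting `b`. The search never sees a
    numeric score, only the expert's choice.
    """
    rng = random.Random(seed)
    best = list(start)
    for _ in range(steps):
        # Propose a small random perturbation of the current favourite.
        candidate = [x + rng.gauss(0.0, step_size) for x in best]
        # "Is it better like this? Or like this?"
        if prefer(candidate, best):
            best = candidate
    return best


# Hypothetical stand-in for the scientist: prefers settings closer to a
# target that the search itself cannot see.
TARGET = [1.0, -2.0]


def squared_error(p: Params) -> float:
    return sum((x - t) ** 2 for x, t in zip(p, TARGET))


def simulated_expert(a: Params, b: Params) -> bool:
    return squared_error(a) < squared_error(b)


result = optometrist_search([0.0, 0.0], simulated_expert)
print([round(x, 2) for x in result])
```

The design point is that the machine does the proposing and the human does the judging. The expert never has to turn their intuition into a number, only to pick one of two.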

For me it calls to mind this famous scene of human-computer interaction: the photo enhancer in Blade Runner.

It makes the human the ineffable intuitive hero, but perhaps masking some of the uncanny superhuman properties of what the machine is doing.

The AIs are magic black boxes, but so are the humans!

Which has led me in the past to consider such AI systems as ‘magic boxes’ in larger service design patterns.

How does the human operator ‘call in’ or address the magic box?

How do teams agree it’s ‘magic box’ time?

I think this work is as important as de-mystifying the boxes!

Lais de Almeida – a past colleague at Google Health and before that DeepMind – has looked at just this in terms of the complex interactions in clinical healthcare settings through the lens of service design.

How does an AI system that can outperform human diagnosis (i.e. the retinopathy AI from DeepMind shown here) work within the expert human dynamics of the team?

My next metaphor might already be familiar to you – the centaur.

[Certainly I’ve talked about it before…!]

If you haven’t come across it:

Garry Kasparov famously took on the chess AI Deep Blue and was (narrowly) defeated.

He came away from that encounter with an idea for a new form of chess where teams of humans and AIs played against other teams of humans and AIs… dubbed ‘centaur chess’ or ‘advanced chess’.

I first started investigating this metaphorical interaction around 2016 – and around that time it manifested in things like Google’s autocomplete in Gmail – but of course the LLM revolution has taken centaurs into new territory.

This very recent paper, for instance, looks at the use of LLMs not only to generate text but to couple that text to other models that can “operate other machines” – i.e. act on what is generated, in the world and on the world (on your behalf, hopefully).

And the notion of a human/AI agent team is something I looked into with colleagues in Google Research’s AIUX team for a while, in numerous projects we did under the banner of “Project Lyra”.

Rather than AI systems that a human interacts with (e.g. a cloud-based assistant as a service), this would pair truly personal AI agents with human owners, working in tandem with the tools and surfaces they both use.

And I think there is something here to engage with in terms of ‘designing the AI we need’ – being conscious of when we make things that feel like ‘pedal-assist’ bikes, amplifying our abilities and reach, vs when we give power over to what political scientist David Runciman has described as the real worry: rather than AI, “AA” – Artificial Agency.

[nb this is interesting on that idea, also]

We worked with London-based design studio Special Projects on how we might ‘unbox’ and train a personal AI, allowing a safe, playful practice space for the human and agent where it could learn preferences and boundaries in ‘co-piloting’ experiences.

For this we looked to techniques for teaching and developing ‘mastery’, adapting them into training kits that would come with your personal AI.

On the ‘pedal-assist’ side of the metaphor, the space of ‘amplification’, I think there is also a question of embodiment in the interaction design, and of a tool’s “ready-to-hand”-ness. Related to ‘where the action is’ is “where the intelligence is”.

In 2016 I was at Google Research, working with a group that was pioneering techniques for on-device AI.

Moving the machine learning models and operations to a device gives great advantages in privacy and performance – but perhaps most notably in energy use.

If you process things ‘where the action is’ rather than firing up a radio to send information back and forth from the cloud, then you save a bunch of battery power…

Clips was a little autonomous camera that had no viewfinder but was trained out of the box to recognise what humans generally like to take pictures of, so you could be in the action. The ‘shutter’ button was just that – but also a ‘voting’ button, training the device on what YOU want pictures of.

There is a neural network onboard the Clips initially trained to look for what we think of as ‘great moments’ and capture them.

It had about 3 hours of battery life and a 120º field of view, and could be held, put down on picnic tables, or clipped onto backpacks or clothing. It was designed so you don’t have to choose between being in the moment and capturing it. Crucially, all the photography and processing stayed on the device until you decided what to do with it.

This sort of edge AI is important for performance and privacy – but also energy efficiency.

A mesh of situated “Small models loosely joined” is also a very interesting counter narrative to the current massive-model-in-the-cloud orthodoxy.

This from Pete Warden’s blog highlights the ‘difference that makes a difference’ in the physics of this approach!

And I hope you agree addressing the energy usage/GHG-production performance of our work should be part of the design approach.

Another example from around 2016-2017 – the on-device “Now Playing” functionality that was built into Pixel phones to quickly identify music using recognisers running purely on the phone. Subsequent Pixel releases have since leaned on these approaches, with dedicated TPUs for on-device AI becoming selling points (as they have for iOS devices too!)

And as we know ourselves we are not just brains – we are bodies… we have cognition all over our body.

Our first shipping AI on-device felt almost akin to these outposts of ‘thinking’ – small, simple, useful reflexes that we can distribute around our cyborg self.

And I think this approach again is a useful counter narrative that can reveal new opportunities – rather than the centralised cloud AI model, we look to intelligence distributed about ourselves and our environment.

A related technique pioneered by the group I worked in at Google is Federated Learning – allowing distributed devices to train privately to their context, but then aggregating that learning to share and improve the models for all while preserving privacy.
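The core loop can be sketched with the textbook Federated Averaging idea (McMahan et al.): each device trains on its own data, and only model weights travel to a server that averages them. This is a minimal sketch under stated assumptions, not Google's production system. The tiny linear model, the synthetic data and the learning rate are all illustrative, and the privacy machinery that real deployments layer on top (e.g. secure aggregation) is left out.

```python
import random
from typing import List, Tuple

Example = Tuple[float, float]   # (x, y) pair held privately on one device
Weights = Tuple[float, float]   # (slope, intercept) of a tiny linear model


def local_update(w: Weights, data: List[Example], lr: float = 0.05, epochs: int = 5) -> Weights:
    """Train on one device's private data with plain SGD.

    Only the resulting weights are sent back; the raw examples stay put.
    """
    slope, intercept = w
    for _ in range(epochs):
        for x, y in data:
            err = (slope * x + intercept) - y
            slope -= lr * err * x
            intercept -= lr * err
    return slope, intercept


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Server step: average client weights, weighted by local dataset size."""
    total = sum(n for _, n in updates)
    slope = sum(w[0] * n for w, n in updates) / total
    intercept = sum(w[1] * n for w, n in updates) / total
    return slope, intercept


def make_client_data(rng: random.Random, n: int) -> List[Example]:
    """Synthetic private data drawn from y = 2x + 1 plus a little noise."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.05)) for x in xs]


rng = random.Random(42)
clients = [make_client_data(rng, n) for n in (20, 50, 30)]

global_w: Weights = (0.0, 0.0)
for _ in range(20):  # communication rounds
    updates = [(local_update(global_w, data), len(data)) for data in clients]
    global_w = federated_average(updates)

print(f"slope={global_w[0]:.2f}, intercept={global_w[1]:.2f}")
```

Here every device's data comes from the same distribution, which keeps the example simple. Real phones hold very different, non-identical data, and that is much of what makes the technique hard in practice.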

This once semi-heretical approach has since become widespread practice in the industry, not just at Google.

My next metaphor builds further on this thought of distributed intelligence – the wonderful octopus!

I have always found this quote from ETH’s Bertrand Meyer inspiring… what if it’s all just knees! No ‘brains’ as such!!!

In Peter Godfrey-Smith’s recent book he explores different models of cognition and consciousness through the lens of the octopus.

What I find fascinating is the distributed, embodied (rather than centralized) model of cognition they appear to have – with most of their ‘brains’ being in their tentacles…

And moving to fiction, specifically SF – this wonderful book by Adrian Tchaikovsky depicts an advanced race of spacefaring octopi with three minds working in concert in each individual: three semi-autonomous but interdependent components, including an “arm-driven undermind (their Reach, as opposed to the Crown of their central brain or the Guise of their skin)”.

I want to focus on that idea of ‘guise’ from Tchaikovsky’s book – how we might show what a learned system is ‘thinking’ on the surface of interaction.

We worked with Been Kim and Emily Reif in Google Research, who were investigating interpretability in models using a technique called Testing with Concept Activation Vectors (TCAV). This allowed subjective qualities like ‘adventurousness’ to be trained into a personalised model and then drawn onto a dynamic control surface for search – a constantly reacting ‘guise’ skin that allows a kind of ‘2-player’ game between the human and their agent searching a space together.
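The core of TCAV (Testing with Concept Activation Vectors, from Been Kim and colleagues) is a concept activation vector: fit a linear classifier that separates a layer's activations for examples of a concept from activations for other examples, and take the normal to its decision boundary as the concept's direction. Below is a minimal sketch of that step. The 3-d "activations" are made up; in practice they would come from a layer of a trained network. This sketch stops at the vector itself and uses a plain projection as a ‘dial’ value. Full TCAV goes further and measures how a model's class predictions change along that direction. Projecting onto the CAV to drive a control surface is an illustrative assumption, not a description of the prototype.

```python
import math
import random
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_cav(concept: List[Vector], others: List[Vector], lr: float = 0.1, epochs: int = 200) -> Vector:
    """Fit a logistic-regression boundary between concept and non-concept
    activations; the unit normal to that boundary is the concept vector."""
    w = [0.0] * len(concept[0])
    b = 0.0
    labelled = [(x, 1.0) for x in concept] + [(x, 0.0) for x in others]
    for _ in range(epochs):
        for x, label in labelled:
            g = sigmoid(dot(w, x) + b) - label  # log-loss gradient w.r.t. the logit
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    norm = math.sqrt(dot(w, w))
    return [wi / norm for wi in w]


def concept_score(activation: Vector, cav: Vector) -> float:
    """How far an activation sits along the concept direction."""
    return dot(activation, cav)


# Made-up 3-d "layer activations": the concept examples (say, photos someone
# tagged as adventurous) are shifted along the first axis.
rng = random.Random(0)
concept_acts = [[rng.gauss(2.0, 0.5), rng.gauss(0.0, 0.5), rng.gauss(0.0, 0.5)] for _ in range(50)]
random_acts = [[rng.gauss(0.0, 0.5) for _ in range(3)] for _ in range(50)]

cav = train_cav(concept_acts, random_acts)
print([round(c, 2) for c in cav])
```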

We built this prototype in 2018 with Nord Projects.

This is CavCam and CavStudio – more work using TCAV by Nord Projects again, with Alison Lentz, Alice Moloney and others in Google Research, examining how these personalised trained models could become reactive ‘lenses’ for creative photography.

There are some lovely UI touches in this from Nord Projects also: for instance the outline of the shutter button glowing with differing intensity based on the AI confidence.

Finally – the Rubber Duck metaphor!

You may have heard the term ‘rubber duck debugging’, whereby you solve your problems or escape creative blocks by explaining them out loud to a rubber duck – or, in the case of this work from 2020 with my then team in Google Research (AIUX), to an AI agent.

We did this through the early stages of Covid, when we keenly felt the lack of the informal studio dialogue that leads to breakthroughs. Could we have LLM-powered agents on hand to help make up for that?

And I think that ‘social’ context for agents assisting creative work is what’s being highlighted here by the founder of Midjourney, David Holz. They deliberately placed their generative system in the social context of Discord to avoid the ‘blank canvas’ problem (as well as to supercharge their adoption). [reads quote]

But this latest much-discussed revolution in LLMs and generative AI is still very text based.

What happens if we take the interactions from magic words to magic canvases?

Or better yet multiplayer magic canvases?

There’s lots of exciting work here – and I’d point you (with some bias) towards an old intern colleague of ours – Gerard Serra – working at a startup in Barcelona called “Fermat”

So finally – as I said I don’t work at this as my day job any more!

I work for a company called Lunar Energy that has a mission of electrifying homes, and moving us from dependency on fossil fuels to renewable energy.

We make solar battery systems but also AI software that controls and connects battery systems – to optimise them based on what is happening in context.

For example this recent (September 2022) typhoon warning in Japan where we have a large fleet of batteries controlled by our Gridshare platform.

You can perhaps see in the time-series plot the battery sites ‘anticipating’ the approach of the typhoon and making sure they are charged to provide effective backup to the grid.
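As a purely hypothetical illustration of what ‘anticipating’ a storm can mean for a single battery, the sketch below raises a reserve target when a warning is active and spreads the charging needed to meet it over the hours before the event. It is not how Gridshare works. The `Battery` fields, the 20% everyday reserve and the even-spreading rule are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Battery:
    capacity_kwh: float   # usable capacity
    soc_kwh: float        # current state of charge
    max_charge_kw: float  # charger/inverter limit


def reserve_fraction(storm_warning: bool, normal_reserve: float = 0.2) -> float:
    """Hypothetical policy: modest reserve day to day, aim for full before a storm."""
    return 1.0 if storm_warning else normal_reserve


def charge_schedule(batt: Battery, hours_until_event: int, storm_warning: bool) -> List[float]:
    """Hourly charging power (kW) to reach the reserve target before the event.

    The shortfall is spread evenly over the remaining hours and capped at the
    charger limit, so a very late warning may leave the target unmet.
    """
    if hours_until_event <= 0:
        return []
    target_kwh = reserve_fraction(storm_warning) * batt.capacity_kwh
    shortfall_kwh = max(0.0, target_kwh - batt.soc_kwh)
    per_hour_kw = min(batt.max_charge_kw, shortfall_kwh / hours_until_event)
    return [per_hour_kw] * hours_until_event


home = Battery(capacity_kwh=10.0, soc_kwh=3.0, max_charge_kw=5.0)
print(charge_schedule(home, 7, storm_warning=True))   # [1.0, 1.0, 1.0, 1.0, 1.0, 1.0, 1.0]
print(charge_schedule(home, 7, storm_warning=False))  # [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

A real fleet controller would also weigh prices, solar forecasts and grid needs across many sites. The point here is only the shift from ‘keep a little back’ to ‘fill up before it arrives’.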

And I’m biased of course – but think most of all this is the AI we need to be designing, that helps us at planetary scale – which is why I’m very interested by the recent announcement of the https://antikythera.xyz/ program and where that might also lead institutions like TU Delft for this next crucial decade toward the goals of 2030.

Back in April 2022, I was invited to speak as part of CIID’s Open Lecture series on my career so far (!) and what I’m working on now at Moixa.com.

Naturally, it ends up talking about trying to reframe the energy transition / climate emergency from a discourse of ‘sustainability’ to one of ‘abundance’ – referencing Russian physicists and Chobani yoghurt.

Thank you so much to Simona, Alie and the rest of the crew for hosting – was a great audience with a lot of old friends showing up which was lovely (not that they spared the hard questions…).

I was on vacation at the time with minimal internet, so I ended up pre-recording the talk – allowing me the novelty of being able to heckle myself in the zoom chat…

Hello, it’s very nice to be “here”!

Thank you CIID for inviting me.

I’ll explain this silly title later, but for now let me introduce myself…

Simona and Alie asked me to give a little talk about my career, which sent me into a spiral of mortality and desperation of course. I’ve been doing whatever I do for a long time now. And the thing is I’m not at all sure that matters much.

I’m 50 this year, you see. I’m guessing everyone has some understanding of Moore’s Law by now – things get more powerful, cheaper and smaller every 18 months to two years or so. I thought about what that means for what I’ve done for the last 50 years. Basically everything I have worked on has changed a million-fold since I started working on it (not getting paid for it!)
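As a rough check on the ‘million-fold’ figure, assume one doubling per Moore’s Law period. A million-fold change takes about twenty doublings, which at one every 18 months to two years is roughly 30 to 40 years of compounding:

$$
\begin{aligned}
2^{n} = 10^{6} \;&\Rightarrow\; n = \frac{6}{\log_{10} 2} \approx 19.9 \text{ doublings} \\
20 \times 1.5\,\text{yr} = 30\,\text{yr}, &\qquad 20 \times 2\,\text{yr} = 40\,\text{yr}
\end{aligned}
$$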

If you’re a designer that works in, say, furniture or fashion – these effects are felt peripherally: maybe in terms of tools or techniques but not at the core of what you do. I don’t mean to pick on Barber Osgerby here, but they kind of started at the same time as me. You get to do deep, good work in a framework of appreciation that doesn’t change that much. I get the sense that even this has changed radically in the last few years as well – for many good reasons.

Anyway – when I was asked to look back on work from BERG days over a decade ago, it’s hard to pretend it matters in the same way as when you did it. But perhaps it matters in different ways? I’m trying here to look for those threads and ideas which might still be useful.

In design for tech we are building on shifting (and short-circuiting) pace layers. I’ve always found it most useful to think of them as connected, rather than separate: slowly permeable cell membranes, or semiconducting layers. New technology is often the wormhole or short-circuit across them.

So with that said, to BERG.

BERG was a studio formed out of a partnership between Matt Webb and Jack Schulze. Tom Armitage joined – and myself shortly after. From there we grew to a small product invention and design consultancy of about 15 folks at our largest, but always around 8-9. It was a great size – I’m still proud of the stuff we took on, and the way we did it.

One of the central tenets of BERG: all you can see of systems are surfaces. The complexity and interdependency of the modern world is not evident, and you can make choices as designers about how to handle that. Most design orthodoxy, then and now, is to drive towards ‘seamlessness’ – but we preferred Mark Weiser’s exhortation for ‘beautiful seams’ that would increase the legibility of systems while still making attractive, easy-to-engage surfaces…

Another central tenet of the studio: “What just got cheap and boring?” We looked to mass-produced toys and electronics, rather than solely to the cutting edge, for inspiration. We tried to understand what had passed through the ‘hype curve’ of the tech scene into what Gartner called ‘the trough of disillusionment’. This felt like the primordial soup of invention.

We got called sci-fi. Design fiction. I don’t think we were sci-fi. We were more like 18th-century naturalists, trying to explain something we were in, to ourselves. I think we were more Brian Arthur than Arthur C. Clarke. We didn’t want to see tech as magic.

Brian Arthur’s book “The Nature of Technology” was a huge influence on me at the time (and continues to be). An economist and scholar of network effects, he tries to establish how and why technology evolves and builds in value. In the book he explains how diverse ‘assemblages’ of scientific and engineering phenomena combine into new inventions. The give and take between human/cultural needs and emergent technical phenomena felt far more compelling and inspiring than the human-centred design orthodoxy at the time.

This emphasis on exploring phenomena and tech as a path to invention we referred to as ‘material exploration’ as a phase of every studio project. We led with it, privileged it in the way contemporaries emphasised user research – sometimes to our detriment! But the studio was a vehicle for this kind of curiosity – and it’s what powered it.

“Lamps” was a very material-exploration heavy project for Google Creative Lab in 2010. It was early days for the commoditisation of computer vision, and also about the time that Google Glass was announced. We pushed this work to see what it would be like to have computer vision in the world *with you* as an actor rather than privatised behind glasses.

The premise was instead of computer vision reporting back to a personal UI, it would act in the world as a kind of projected ‘smart light’, that would have agency based on your commands.

To make these experiments we had to build a rig. An example here of how the pace-layers of past design work get short-circuited. Here’s our painstaking 2010, 10x the size, cost, pain DIY version of ARKit… which would come along only a few years later.

This final piece takes the smart light idea to a ‘speculative design’ conclusion. What if we made very dumb interactive blocks that were animated with ‘smart light’ computation from the cloud… There’s a little bit of a critical thought in here, but mainly we loved how strange and beautiful this could look!

We also treated data as a material for exploration. One of the projects I’m always proudest of was Schooloscope in 2009 (one of the first BERG studio projects for Channel4 in the UK) – led by Matt Webb, Matt Brown and Tom Armitage which did a fantastic job of reframing contentious school performance data from a cold emphasis on academic performance to a much more accessible and holistic presentation of a school for parents (and kids) to access. Each school’s performance data creates an avatar based on our predisposition to interpret facial expression (after Chernoff)
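The Chernoff idea is simply to map each number onto a facial feature, so that people read the data the way they read faces. Here is a minimal sketch of that mapping. Schooloscope’s actual features and data sources aren’t described here, so the metric names (`pupil_happiness`, `exam_results`, `inspection_rating`) and the feature pairings are hypothetical. The inputs are assumed to be already normalised to 0..1.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Face:
    mouth_curve: float   # -1 = frown, +1 = broad smile
    eye_openness: float  # 0 = sleepy, 1 = wide awake
    brow_tilt: float     # -1 = worried, +1 = relaxed


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def school_face(metrics: Dict[str, float]) -> Face:
    """Map metrics normalised to 0..1 onto facial features.

    Which metric drives which feature is a design decision; these
    (hypothetical) pairings are purely illustrative.
    """
    happiness = clamp01(metrics["pupil_happiness"])
    results = clamp01(metrics["exam_results"])
    inspection = clamp01(metrics["inspection_rating"])
    return Face(
        mouth_curve=2.0 * happiness - 1.0,
        eye_openness=results,
        brow_tilt=2.0 * inspection - 1.0,
    )


face = school_face({"pupil_happiness": 0.9, "exam_results": 0.4, "inspection_rating": 0.5})
print(face)
```

Mapping a softer measure to the smile, rather than exam scores, is one way to express the holistic reframing described above. The face then foregrounds how a school feels, not only how it ranks.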

Another example of play – Suwappu was an exploration of a toy/media franchise for Dentsu. Each toy has an AR environment coded to it, and weekly updates to the storylines and interactions between them were envisaged. Again, a metaverse in… reverse?

A lot of the studio work we couldn’t talk about publicly or release. I don’t think I’ve ever shown this before for instance, which was work we did for Intel looking at the ‘connected car’ and how it might relate to digital technology in cities and people’s pockets.

A lot of it was video prototyping work – provocations and anticipated directions that Intel’s advance design group could show to device and car manufacturers alike – to sell chips!

We deployed our usual bag of tools here – and came up with some interesting speculations – for instance thinking about the whole car as interface in response to the emerging trend of the time of larger and larger touchscreen UI in the car (which I still think is dangerous/wrong!!!)

Here’s another example of smart light – computer vision and projection in one product: an inspection lamp that makes the inscrutable workings of the modern car legible to the owner.

Something we wrote as part of a talk back then.

I guess we were metaverse-averse before there was a metaverse (there still isn’t a metaverse)

I left BERG in 2013 – it stopped doing consulting and for a year it continued, more focussed on its IoT product platform ‘bergcloud’ and the Little Printer product.

In 2014 it shut up shop, which was marked by some nice things like this from William Gibson. Everyone went on to great things! Apple, Microsoft – and starting innovative games studio Playdeo for instance. In my case, I went to work for Google…

So in 2013 I moved to NYC and started to work for Google Creative Lab – whom I’d first met working on the Lamps project. There I did a ton of concept and product design work which will never see the light of day (unfortunately) but also worked on a couple of things that made it out into the world.

Creative Lab was part of the marketing wing of Google rather than the engineering group – so we worked often on pieces that showed off new products or tech – and also connected to (hopefully) worthy projects out in the world.

This piece called Spacecraft For All was a kind of interactive documentary of a group looking to salvage and repurpose a late 1970s NASA probe into an open-access platform for citizen science.

It also got to show off how Chrome could combine video and webGL in what we thought was a really exciting way to explain stuff.

Another project I’m still pretty proud of from this period is Project Sunroof – a tool conceived of by google engineers working on search and maps to calculate the solar potential of a roof, and then connect people to solar installers.

We worked on the branding, interface and marketing of the service, which still exists in the USA. There were a number of other energy initiatives I worked on inside Google at the time – which was a much more curious (and hubristic!) entity back in the Larry/Sergey days – for good and for ill.

One last project from Google – by this time (2016) I’d moved from Creative Lab to Google Research, working with a group that was pioneering techniques for on-device AI. Moving the machine learning models and operations to a device gives great advantages in privacy and performance – but perhaps most notably in energy use. If you process things ‘where the action is’ rather than firing up a radio to send information back and forth from the cloud, then you save a bunch of battery power…

Clips is a little autonomous camera that has no viewfinder but is trained out of the box to recognise what humans generally like to take pictures of so you can be in the action. The ‘shutter’ button is just that – but also a ‘voting’ button – training the device on what YOU want pictures of.

Along with Clips, the ‘now playing’ audio recognition, many camera features in Pixel phones and local voice recognition all came from this group. I thought of these ML-powered features not as the ‘brain-centered’ AI we think of from popular culture but more like the distributed, embodied neurons we have in our knees, stomach etc.

At the beginning of this year I left Google and went to work for Moixa. Moixa is an energy tech company that builds HW and SW to help move humanity to 100% clean energy. More about them later!

Instead of overlaying Moore’s Law on this step of my career, here is another graph of an altogether less welcome progression: Professor Ed Hawkins’s visualisation of how the world has warmed from 1850 to 2018.

I’ve been thinking a lot – both prior to and since joining Moixa about design’s role in helping with the transition to clean energy. And I think something that Matt Webb often talked about back in BERG days about product invention has some relevance.

And we all love a 2×2, right?

He related this story that he in turn had heard (sorry I don’t have a great scholarly citation here) about there being 4 types of product: Fear, Despair, Hope and Greed products.

Fear products are things you buy to stop terrible things happening; Despair products are things you have to buy – energy, toilet paper… Greed products might get you an advantage in life, or make you richer somehow (investments, MBAs…), but Hope products speak to something aspirational in us.

What might this be for energy?

You probably thought I was going to reference The Ministry for the Future by KSR, but hopefully I surprised you with Family Guy! Its creator, Seth MacFarlane, went on to create “The Orville”, an optimistic love letter to Star Trek, and has this to say:

“Dystopia is good for drama because you’re starting with a conflict: your villain is the world. Writers on “Star Trek: The Next Generation” found it very difficult to work within the confines of a world where everything was going right. They objected to it. But I think that audiences loved it. They liked to see people who got along, and who lived in a world that was a blueprint for what we might achieve, rather than a warning of what might happen to us.” – I think we can say the same for the work of design.

I’m going to read this quote from Kim Stanley Robinson. It’s long but hopefully worth it.

“It’s important to remember that utopia and dystopia aren’t the only terms here. You need to use the Greimas rectangle and see that utopia has an opposite, dystopia, and also a contrary, the anti-utopia. For every concept there is both a not-concept and an anti-concept. So utopia is the idea that the political order could be run better. Dystopia is the not, being the idea that the political order could get worse. Anti-utopias are the anti, saying that the idea of utopia itself is wrong and bad, and that any attempt to try to make things better is sure to wind up making things worse, creating an intended or unintended totalitarian state, or some other such political disaster. 1984 and Brave New World are frequently cited examples of these positions. In 1984 the government is actively trying to make citizens miserable; in Brave New World, the government was first trying to make its citizens happy, but this backfired. … it is important to oppose political attacks on the idea of utopia, as these are usually reactionary statements on the behalf of the currently powerful, those who enjoy a poorly-hidden utopia-for-the-few alongside a dystopia-for-the-many. This observation provides the fourth term of the Greimas rectangle, often mysterious, but in this case perfectly clear: one must be anti-anti-utopian.”

I’ve been reading a lot of solarpunk lately in search of such utopias. But the absolute best vision of a desirable future I have seen in recent years has not come from a tech company or a government – but from a yoghurt maker. This is the design of the future as a Hope product.

“We worked closely with Chobani to realise their vision of a world worth fighting for. It’s not a perfect utopia, but a version of a future we can all reach if we just decide to put in the work. We love the aspiration in Chobani’s vision of the future and hope it will sow the seeds of optimism and feed our imagination for what the future could be. It’s a vision we can totally get behind. We couldn’t be more happy to be part of this campaign.” – The Line

In 1964 a physicist named Nikolai Kardashev proposed a speculative scale or typology of civilisations, based on their ability to harness energy.

Humans are currently at around 0.7 on the scale.

A Type I civilization is usually defined as one that can harness all the energy that reaches its home planet from its parent star (for Earth, this value is around 2×10^17 watts), which is about four orders of magnitude higher than the amount presently attained on Earth, with energy consumption at ≈2×10^13 watts as of 2020.

So, four orders of magnitude more energy is possible just from the solar potential of Earth.
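The arithmetic behind both numbers is sketched below. The ratio uses the figures quoted above. The fractional rating comes from Carl Sagan’s interpolation formula, with P the power used in watts, which is the usual way to get a value like 0.7. Note that Sagan’s formula puts Type I at 10^16 W (K = 1), a lower bar than the 2×10^17 W figure used above.

$$
\frac{2\times10^{17}\,\text{W}}{2\times10^{13}\,\text{W}} = 10^{4}
$$

$$
K = \frac{\log_{10} P - 6}{10}, \qquad K\!\left(2\times10^{13}\,\text{W}\right) = \frac{13.30 - 6}{10} \approx 0.73
$$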

A Type 1 future could be glorious. A protopia.

At Moixa we make something that we hope is a building block of something like this – solar energy storage batteries that can be networked together with software to create virtual power plants, that can replace fossil fuels. It’s one part of our mission to create 100% electric homes this decade.

The home is a place where design and desire become important for change. I hope we can make energy transition in the home something that is aspirational and accessible with good design.

I’ve also been very inspired by Saul Griffith’s book “Electrify” – please go read it at once! It points out a ton of design and product opportunity over the coming decade to move to clean, electric-powered lives.

As he says:

“I think our failure on fixing climate change is just a rhetorical failure of imagination. We haven’t been able to convince ourselves that it’s going to be great. It’s going to be great.”

– Saul Griffith

I’ll finish with a couple more quotes:

“If we can make it through the second half of this century, there’s a very good chance that what we’ll end up with is a really wonderful world”

– Jamais Cascio

“An adequate life provided for all living beings is something the planet can still do; it has sufficient resources, and the sun provides enough energy. There is a sufficiency, in other words; adequacy for all is not physically impossible. It won’t be easy to arrange, obviously, because it would be a total civilizational project, involving technologies, systems, and power dynamics; but it is possible. This description of the situation may not remain true for too many more years, but while it does, since we can create a sustainable civilization, we should. If dystopia helps to scare us into working harder on that project, which maybe it does, then fine: dystopia. But always in service to the main project, which is utopia.”

– Kim Stanley Robinson

Matt Jones: Jumping to the End — Practical Design Fiction from Interaction Design Association on Vimeo.

This talk summarizes a lot of the approaches that we used in the studio at BERG, and some of those that have carried on in my work with the gang at Google Creative Lab in NYC.

Unfortunately, I can’t show a lot of that work in public, so many of the examples are from BERG days…

Many thanks to Catherine Nygaard and Ben Fullerton for inviting me (and especially to Catherine for putting up with me clowning around behind her while she was introducing me…)

The session I staged at FooCamp this year was deliberately meant to be a fun, none-too-taxing diversion at the end of two brain-baking days.

It was based not only on a quote from BSG but on something that Matt Biddulph had said to me a while back – possibly when we were doing some work together at BERG, but it might have been as far back as our Dopplr days.

He said (something like) that a lot of the machine learning techniques he was deploying on a project were based on 1970s computer science theory, but now the horsepower required to run them was cheap and accessible in the form of cloud computing services.

This stuck with me, so for the Foo session I hoped I could aggregate a list of people’s favourite theoretical work from the 20thC that might now be possible to turn into practice.

It didn’t quite turn out that way. As Tom Coates pointed out in the session, about halfway through it morphed into a list of the “prior art” in both fiction and academic theory that you could identify as precursors to current technological preoccupations or practices.

Nevertheless, it was a very fun way to spend a sunny Sunday hour in a tent with a flip chart and some very smart folks. Thanks very much as always to O’Reilly for inviting me.

Below is my photo of the final flip charts full of everything from Xanadu to zeppelins…

A quote I used in Dan Saffer’s session on smart devices that use data collection to try to predict what their users might want: “Today’s devices blurt out the absolute truth as they know it. A smart device in the future might know when NOT to blurt out the truth.” – Genevieve Bell. Also got to […]

If only more conference speakers felt this way…

I give very few talks about anything. I am terrible at knowing what I know. I assume that most people in the audience of any conference I attend will know more than me about anything I could talk about. For similar reasons, I’m no good at thinking of things I could write about for magazines. You all know what I know.

It turns out that I need to run a website on a very specialised topic for eight years before I’m in a position to feel confident talking about it. This may be a little extreme.

Probably something I should bear in mind.

“More hammering, less yammering” as Bleecker puts it.

John Thackara writes in his always-excellent Doors of Perception newsletter that he may have finally squared the circle of travelling to events to speak about environmental impact…

After years traveling the world in airplanes to speak at sustainability events, my low-emission online alternative is now available. In recent weeks I was compelled by a family matter to substitute my physical presence with a virtual one in Austria, China, Canada, the USA, and Brazil (Curitiba and Rio). These online encounters have a simple format: I make a customized-for-you 20 minute pre-recorded talk, which is downloaded in advance; this film is then shown at an event; this is followed by a live conversation between me and your group via Skype or POTS (Plain Old Telephone Service). The films are neither fancy nor glossy, but this simple combination seems to work well.

It occurs to me, though, that part of the pleasure – and reward – of travel to conferences (apart from the well-documented serendipity of what happens outside the scheduled sessions) is the chance to visit a new city and experience its culture.

Often, if you are lucky, this is in the company of locals that you have met at the conference, who will show you ‘their’ city rather than the official version.

I wonder if John has considered asking those locals at the conferences he will ‘attend’ via video to send him back a 20 minute customised-for-him film of their city or town?

Might work nicely, no?